AI is a tool, not a mission

The AI backlash is growing just as some civic tech leaders are ramping up their cheerleading

As with any tech-related conference these days, the Code for America #CfASummit last week was filled to the brim with AI references and sessions. Most presenters heralded “AI” (which is, helpfully, whatever you need it to be) as a panacea: the linchpin for solving all kinds of government and public service problems. Only two voices I heard offered a counterpoint, the most strident of which amounted to, “Well, we should probably be careful with this technology.”

A local conference in Columbus a week prior was similar: AI—without describing any specific, documented project or outcome—was going to change all our lives, jobs, and public service itself. (Although the message fell a little flat since it was delivered by a mid-level Deloitte drone hawking consulting contracts.)

Despite all the jazz-hands and prognostication at civic tech conferences, I remain skeptical. And I’m not alone. On May 31, just one day after Code for America made a splashy AI announcement, the Wall Street Journal (of all places) summarized a quiet alarm that has been rising in the shadows of the tech industry for the past 6 months or so:

Commentary on social media was, predictably, more pointed and a lot funnier:

My concerns with AI in the civic tech sector…

are not purely environmental, even though we’re discovering AI platforms burn far too much energy for their limited benefit

are not purely ethical, even though they may be used to eliminate jobs merely to turn billionaires into trillionaires

are not about the human biases injected into the platforms by data sets and developers, even though that could disenfranchise whole classes of people on a massive scale, and

are not about the factual errors the systems generate, which are totally predictable since AI doesn’t actually know anything.

Government is different

No, my chief concern is that some civic tech and government players are missing two key perspectives, and they shouldn’t be moving so fast anyway.

First, nearly everyone misunderstands how LLMs and algorithmic analyses work, which leads to unrealistic expectations about what AI tech can do. Can AI help identify homes in Flint, MI that may have lead pipes? Yes, it can, via algorithmic processing of prepared data sets (which may not even be “AI” anyway). AI can even help with limited language translations, with fairly high success rates for very basic communication. Indeed, processing text (especially in English) is what LLMs do best. But they can’t handle analyzing or representing facts or policies or generalized decisions because they don’t actually know anything. They can merely spot and/or generate data patterns.

Second, some leading-edge civic tech folks appear to have failed to learn a lesson the tech industry has been teaching for the past 30 years. Gartner explained it long ago with their Hype Cycle model:

The underlying point of the Hype Cycle: new technologies arrive on the scene, get attention far in excess of their value, and everyone jumps to a “this turns the world upside down” conclusion. Change instigated by the introduction of an innovation does take hold, but it’s never as fast or as intense as predicted.

And in government, such changes take even longer. And they should! Government is not designed to extract maximum value for shareholders at maximum speed. We should be skeptical of rapid change because government must represent the needs and interests of everyone, and new tech never gets distributed evenly from the start. To this day, 30 years into the Internet “revolution,” broadband remains unevenly distributed, and the only things pushing it the last mile into low-income areas are subsidies or direct government service. Indeed, our digital teams must consider how the public will get service if they don’t use the Internet.

AI : FOMO

So why is “AI” the hottest topic at civic tech conferences…

despite having very few proven use cases

despite being a clear example of the “peak of inflated expectations” and

despite government having a responsibility to slow down and include everyone?

I have to assume the biggest factor is FOMO (Fear of Missing Out). Everyone is talking about AI, the stock market is rewarding AI-related moves, and the AI (LLM) parlor tricks—available for free to anyone—are entertaining and impressive. Consulting shops are also pimping the tech to get the next wave of consulting dollars.

Plus, we’re public servants—if we see a way to boost public service without growing payroll, we’re gonna look into it. We get frustrated with the slow pace of change, too.

AI gets all the oxygen

This fixation on AI-derived solutions is absorbing all the oxygen in various conference sessions when we still have fundamentals to master.

We could dedicate more time to training our govtech colleagues in Human-Centered Design (HCD).

We could boost utilization of Agile development and deployment approaches.

We could teach Customer Experience (CX) and UX research methods and tools, all readily available today.

We could work on professionalizing end-user training, teaching Product Management concepts, sharing Lean examples, showing how to govern low-code tools… the list goes on.

Instead, we spend lots and lots of time dreaming of how AI will save us.

Hey, I’ve used AI tools! I know others in my organization have, too—some regularly and on the sly. It’s popular. But is it truly useful most of the time in most roles? Consider this observation from the WSJ article (boldface mine):

A recent survey conducted by Microsoft and LinkedIn found that three in four white-collar workers now use AI at work. Another survey… shows about a third of companies pay for at least one AI tool, up from 21% a year ago.

This suggests there is a massive gulf between the number of workers who are just playing with AI, and the subset who rely on it and pay for it. Microsoft’s AI Copilot, for example, costs $30 a month [per person].

We’re all playing at AI. But these are distractions. Consider…

There are roughly 90,000 local governments (including about 19,000 municipalities and more than 3,000 counties), 50 states, and seemingly countless federal agencies. Only a small fraction of them have organized themselves around HCD, CX, UX, Agile, Product Management, low-code solutions, and other modern approaches. Pennsylvania only launched CODE PA a year ago, and my own state of Ohio doesn’t even have a digital services team.

Indeed, our team’s term “GX” (Government Experience) is a foreign idea to the vast majority of government entities out there. But the civic tech world is spending more time talking about the potential of AI than about how to clean up our websites with plain language, standardize our data sets for good analysis, or improve inter-agency process flows and data exchanges.

AI is getting all the oxygen. It’s leaving the rest of us dizzy from oxygen deprivation. And it’s not serving the public with the fundamentals.

Okay, so what do we do, smart guy?

I know. Being anything but an AI booster makes you sound like an AI crank. Like so many things in 21st century America, it feels like everyone has to pick a side and suit up for war.

But I’m not anti-LLM / anti-ML / anti-AI. I’m anti-hype. And I’m pro-fundamentals. I’m a fan of crawling before walking before running before biking before driving before flying.

So what am I advocating?

Well, for a good example, let’s pay attention to the work presented by U.S. Digital Response’s Marcie Chin at the #CfASummit this past week.

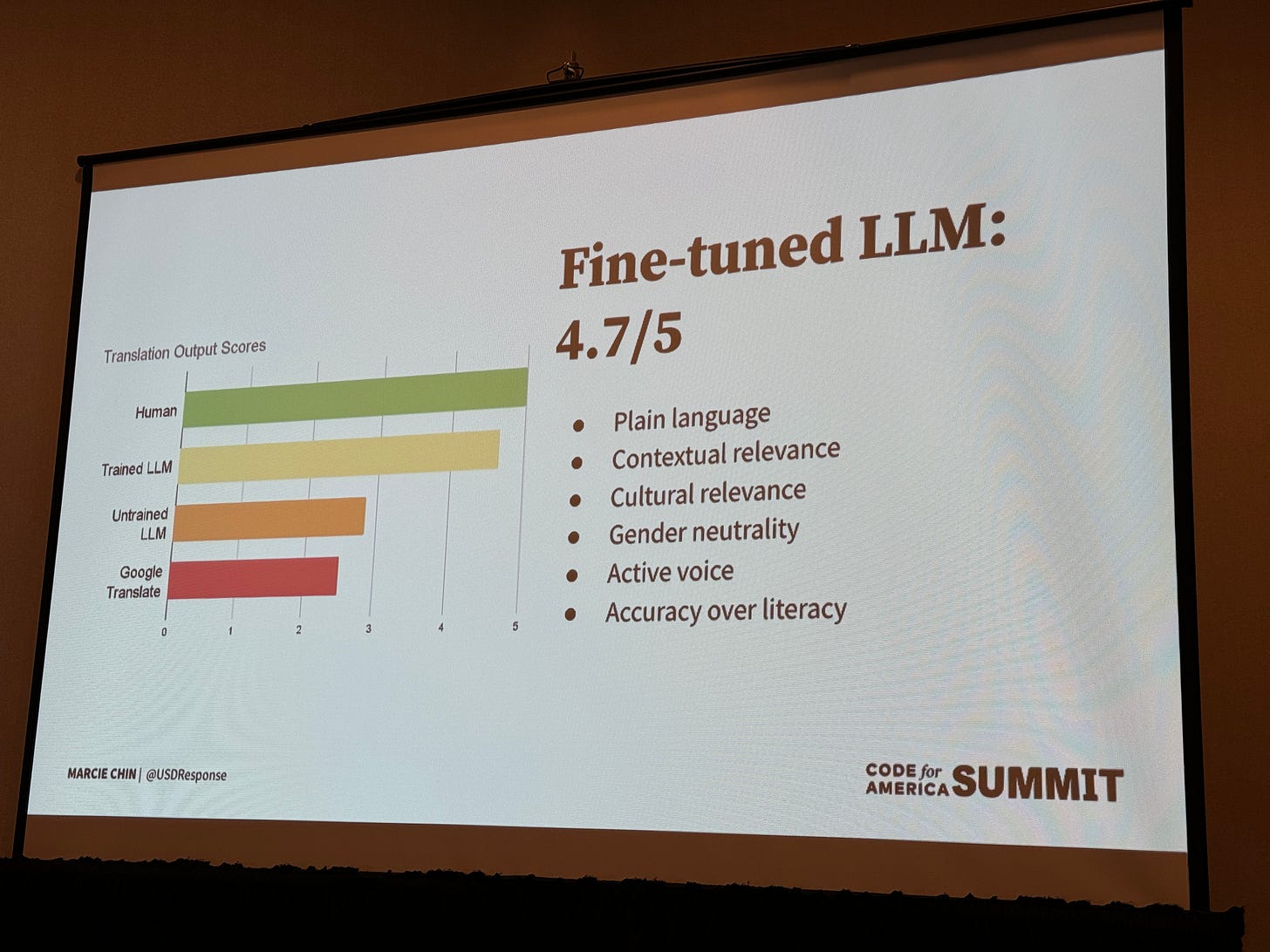

In the talk Marcie presented alongside colleagues from New Jersey, they called out using LLM-based ChatGPT to assist with translations of Unemployment Insurance forms and service explanations into Spanish. But they didn’t just slap some ChatGPT Spanish on a web page and walk away. They carefully analyzed what was going on and made choices about how to apply a mix of technologies and human techniques to get the best results for real Spanish-speaking users navigating the arcane world of unemployment. They used real humans to control the final product, but still tapped into AI tools to generate some initial inputs.

That’s how they got these results (among others):

Their bottom line? LLM-style AI tools can speed up development of text products, including translations, but for the best and most reliable experience—especially for multiple Spanish dialects—the work still requires human intervention. An automated AI translation is better than nothing, but that can’t be a government team’s stopping point. Not if they want the services to actually reach the intended recipients. Not if they care.

A path forward

Perhaps my conclusion is obvious. Maybe I’m wasting my time writing all this, as I’m sure others have said something like this before. I certainly don’t feel like I have a corner on the market for a reasonable take.

No more boosters. No more hand-wringers.

But it feels like the only voices I hear out there are the boosters and hand-wringers. AI will kill us all or AI will save us all. And it’s all just nonsense when you look at the tech and the (greedy) people promoting it.

As I noted on LinkedIn recently, journalist Julia Angwin had a ridiculously smart take on what the AI players are doing in the market today (lying) and how that's going (emphases mine):

The [AI] companies... did themselves a disservice by coming out with this technology and saying, "It's so good... the main concern here is that it's actually going to take over the world and kill humans." When you start with that level of hype it's actually really hard to walk back to where it can barely answer a question accurately. I'm sure it seemed like a great marketing strategy at the time because it did make [AI] seem so sexy and dangerous...

I honestly think that OpenAI disbanding their [safety] team is a little bit of an acknowledgement, like "that isn't actually the issue we're facing here right now. We are actually facing the fact that it's kind of unreliable, it's not consistently accurate, and we have to kind of solve those problems."

—as heard on the Pivot podcast

Even the loudest-mouthed proponents of generative AI are realizing the limits of their creation.

Where to go in civic tech

The path forward for AI in civic tech is kinda simple, really:

Before any substantial investments in AI tech, invest in the fundamentals first.

Get good at HCD, UX, CX, Agile, user training, low-code tools, plain language writing, basic web pages and information architecture, and so forth.

Get your data sets cleaned up and centralized, and get your data analytics capabilities on point. You need this to make a lot of AI tools work anyway.

Before translating anything, fix the damn English! Make your English language content plain, readable, and comprehensible to non-government types.

Pay attention to developments in AI via news articles, but stay skeptical and demand to “see the receipts.”

What was actually done with AI, and how did the tech play into the solution?

Did humans check any of the results?

How much processing energy did the AI solution take? What’s the environmental impact?

If civic tech colleagues are talking about AI, make sure to dig into the practical details in conversation with them, to find out if what they are describing is relevant to your real-world issues.

For example, Marcie at USDR had a very detailed story to tell because they used AI tools, tested the results, checked with human translators, and compared multiple approaches. They did the work. That’s the standard.

If it feels like a presenter is imagining the future rather than pointing to real work done in the real world for real people, take it with a block of rock salt.

Read sample AI policies and guidelines from multiple sources and develop guidelines that are meaningful and useful to your own organization.

Set a schedule to review and revise your policies and guidelines every 6 months for the foreseeable future.

Depending on the scale of your organization, consider creating a role that focuses on AI usage, whether that use is sanctioned or not. Gather info on use cases. Classify the AI types (some “AI” isn’t really AI). Educate government leaders on AI topics and concepts.

And I’m sure there are more recommendations I could offer, but all that is plenty to do. (But share your ideas in the comments.)

Final thoughts

The following post on Bluesky got me thinking about how soooooo hard we anthropomorphize inanimate things, including AI:

Bingo. All the talk of AI being a savior or a demon is just us humans wanting to believe our own press: “Wouldn’t it be cool if we were gods?”

It’s time these lofty notions were brought back down to Earth. Not because that will “save” us as a species, but because it will save us all the energy we’ll otherwise expend gaslighting ourselves for the next 10 years.

I still think LLM-style AIs have targeted utility, but turning civic tech upside down to put AI at the center of our work will prove to be wasteful at best and folly at worst. I hope Code for America and all of us will slow down and stay focused on the fundamentals of building a civic tech ecosystem that solves human-scale problems humanely.

Perhaps Christopher Mims, author of the WSJ article, says it best:

None of this is to say that today’s AI won’t, in the long run, transform all sorts of jobs and industries. The problem is that the current level of investment—in startups and by big companies—seems to be predicated on the idea that AI is going to get so much better, so fast, and be adopted so quickly that its impact on our lives and the economy is hard to comprehend.

Mounting evidence suggests that won’t be the case.

So let’s breathe a little easier, focus on digital fundamentals, data analytics fundamentals, collaboration and workflows, and keep our eyes open for targeted opportunities—particularly in text processing—where LLMs or other AI tools can help speed up some processes.

I’ll be happy to attend more conference sessions where folks show what they actually achieved with AI tools in the real world, and how they kept a tight leash on the tech and their own expectations.

Am I nuts? Did I miss an entire idea? Hit me up in the comments.
